Google Model Search

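Google Model Search is an open-source platform that addresses the shortcomings of existing NAS (neural architecture search) methods and helps you develop optimal models efficiently and automatically. This note shares the results of testing Google Model Search so that it can be run directly in Colab. We will go through cloning the repository from GitHub, installing packages, setting up the environment, walking through the example source code, and checking the output.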

Related links

- Blog post: https://ai.googleblog.com/2021/02/introducing-model-search-open-source.html

- GitHub repository: https://github.com/google/model_search

Machine learning, deep learning

DL/ML concepts

For setup and basic lessons, see schoolWiki.

| Level of Big Data Maturity | Tools used |
|---|---|

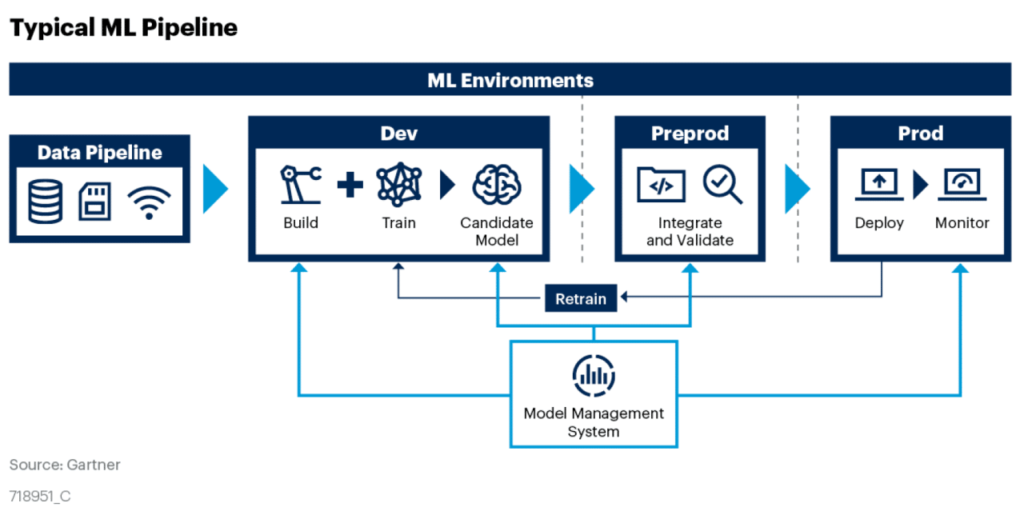

ML pipeline (article_link)

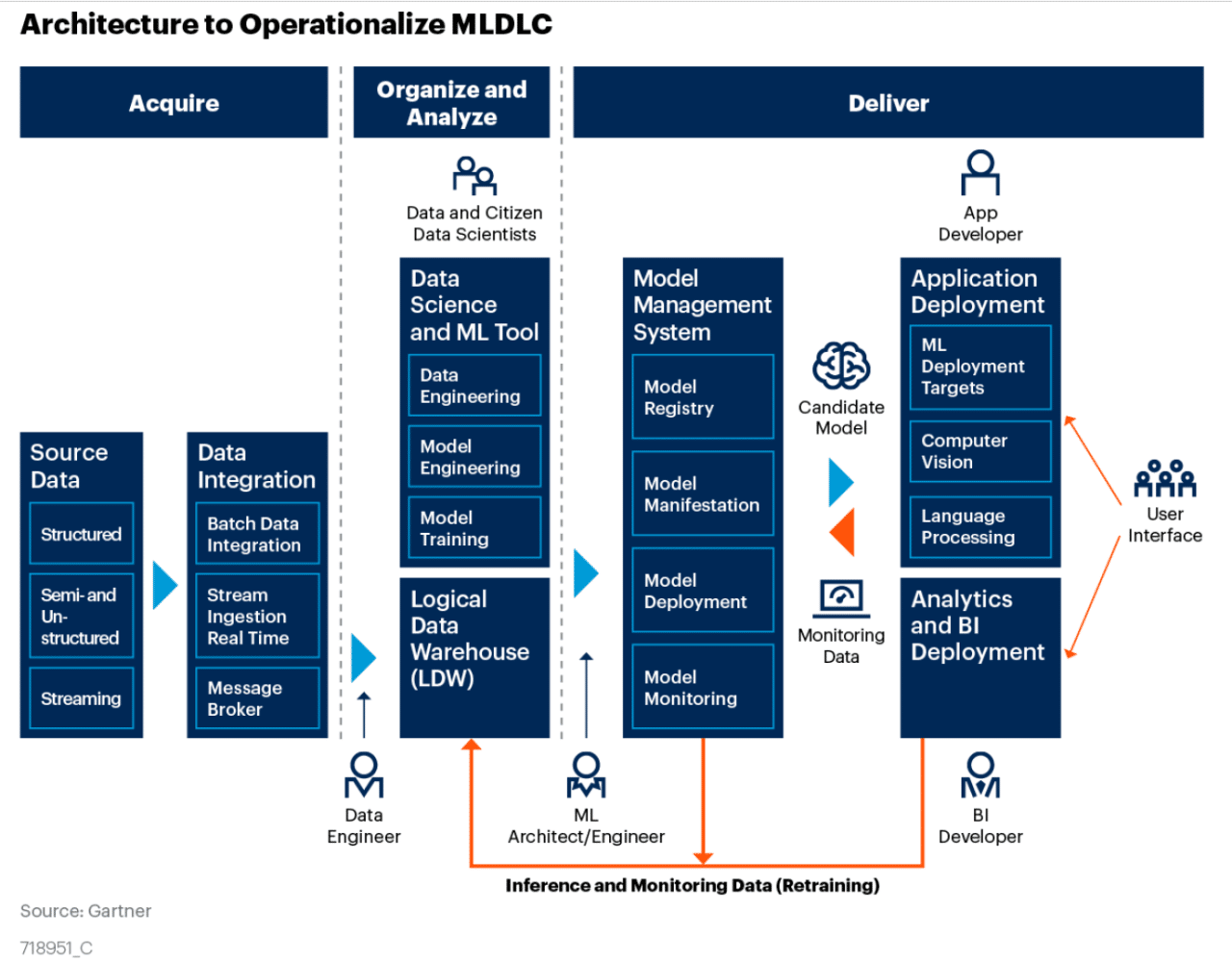

MLDLC architecture (same link as above)

500+ Artificial Intelligence Project List

500 AI, machine learning, deep learning, computer vision, and NLP projects with code

This list is continuously updated; you can contribute by opening a pull request.

| No. | Name | Link |
|---|---|---|
| 1 | 180 Machine Learning Projects | is.gd/MLtyGk |
| 2 | 12 Machine Learning Object Detection | is.gd/jZMP1A |
| 3 | 20 NLP Projects with Python | is.gd/jcMvjB |
| 4 | 10 Machine Learning Projects on Time Series Forecasting | is.gd/dOR66m |
| 5 | 20 Deep Learning Projects Solved and Explained with Python | is.gd/8Cv5EP |
| 6 | 20 Machine Learning Projects | is.gd/LZTF0J |
| 7 | 30 Python Projects Solved and Explained | is.gd/xhT36v |
| 8 | Machine Learning Course for Free | https://lnkd.in/ekCY8xw |
| 9 | 5 Web Scraping Projects with Python | is.gd/6XOTSn |
| 10 | 20 Machine Learning Projects on Future Prediction with Python | is.gd/xDKDkl |
| 11 | 4 Chatbot Projects with Python | is.gd/LyZfXv |
| 12 | 7 Python GUI Projects | is.gd/0KPBvP |
| 13 | All Unsupervised Learning Projects | is.gd/cz11Kv |
| 14 | 10 Machine Learning Projects for Regression Analysis | is.gd/k8faV1 |
| 15 | 10 Machine Learning Projects for Classification with Python | is.gd/BJQjMN |
| 16 | 6 Sentiment Analysis Projects with Python | is.gd/WeiE5p |
| 17 | 4 Recommendation Projects with Python | is.gd/pPHAP8 |
| 18 | 20 Deep Learning Projects with Python | is.gd/l3OCJs |
| 19 | 5 COVID-19 Projects with Python | is.gd/xFCnYi |
| 20 | 9 Computer Vision Projects with Python | is.gd/lrNybj |
| 21 | 8 Neural Network Projects with Python | is.gd/FCyOOf |
| 22 | 5 Machine Learning Projects for Healthcare | link |
| 23 | 5 NLP Projects with Python | link |
| 24 | 47 Machine Learning Projects for 2021 | link |
| 25 | 19 Artificial Intelligence Projects for 2021 | link |
| 26 | 28 Machine Learning Projects for 2021 | link |
| 27 | 16 Data Science Projects with Source Code for 2021 | link |
| 28 | 24 Deep Learning Projects with Source Code for 2021 | link |
| 29 | 25 Computer Vision Projects with Source Code for 2021 | link |
| 30 | 23 IoT Projects with Source Code for 2021 | link |
| 31 | 27 Django Projects with Source Code for 2021 | link |
| 32 | 37 Python Fun Projects with Code for 2021 | link |
| 33 | 500+ Top Deep Learning Codes | link |
| 34 | 500+ Machine Learning Codes | link |
| 35 | 20+ Machine Learning Datasets & Project Ideas | link |
| 36 | 1000+ Computer Vision Codes | link |
| 37 | 300+ Industry-wise Real-World Projects with Code | link |
| 38 | 1000+ Python Project Codes | link |
| 39 | 363+ NLP Projects with Code | link |
| 40 | 50+ Core ML Models (for iOS 11) Projects | link |
| 41 | 180+ Pretrained Model Projects for Image, Text, Audio and Video | link |
| 42 | 50+ Graph Classification Project List | link |
| 43 | 100+ Sentence Embedding (NLP Resources) | link |
| 44 | 100+ Production Machine Learning Projects | link |
| 45 | 300+ Machine Learning Resources Collection | link |
| 46 | 70+ Awesome AI | link |
| 47 | 150+ Machine Learning Project Ideas with Code | link |
| 48 | 100+ AutoML Projects with Code | link |
| 49 | 100+ Machine Learning Model Interpretability Code Frameworks | link |
| 50 | 120+ Multi Model Machine Learning Code Projects | link |
| 51 | Awesome Chatbot Projects | link |
| 52 | Awesome ML Demo Projects with iOS | link |
| 53 | 100+ Python-based Machine Learning Application Projects | link |
| 54 | 100+ Reproducible Research Projects of ML and DL | link |
| 55 | 25+ Python Projects | link |
| 56 | 8+ OpenCV Projects | link |
| 57 | 1000+ Awesome Deep Learning Collection | link |
| 58 | 200+ Awesome NLP Learning Collection | link |
| 59 | 200+ The Super Duper NLP Repo | link |
| 60 | 100+ NLP Datasets for Your Projects | link |
| 61 | 364+ Machine Learning Projects Definition | link |
| 62 | 300+ Google Earth Engine Jupyter Notebooks to Analyze Geospatial Data | link |
| 63 | 1000+ Machine Learning Projects Information | link |
| 64 | 11 Computer Vision Projects with Code | link |
| 65 | 13 Computer Vision Projects with Code | link |
| 66 | 13 Cool Computer Vision GitHub Projects To Inspire You | link |
| 67 | Open-Source Computer Vision Projects (With Tutorials) | link |
| 68 | OpenCV Computer Vision Projects with Python | link |
| 69 | 100+ Computer Vision Algorithm Implementations | link |
| 70 | 80+ Computer Vision Learning Codes | link |
| 71 | Deep Learning Treasure | link |

Road Lane line detection – CV Project

Lane-line detection is a critical component of self-driving cars and a common task in computer vision in general. It is used to determine the path a self-driving car should follow and to avoid the risk of drifting into another lane.

In this project, we will build a system that detects lane lines in real time using computer-vision techniques from the OpenCV library. To detect the lane, we have to detect the markings (white, and in the code below also yellow) on both sides of the lane.

Road Lane-Line Detection with Python & OpenCV

Using computer-vision techniques in Python, we will identify the road lane lines within which an autonomous car must drive. This is a critical part of autonomous driving: to avoid accidents, a self-driving car should not cross out of its lane or drift into the opposite lane.

Frame Masking and Hough Line Transformation

To detect the white markings in the lane, we first need to mask the rest of the frame; this is called frame masking. A frame is simply a NumPy array of pixel values, so to mask the unnecessary pixels we set their values to 0 in the array.
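A minimal NumPy-only illustration of that masking idea. The helper name `mask_frame` and the bottom-half region of interest are hypothetical choices for this sketch; the project code instead builds a trapezoidal mask with `cv2.fillPoly`.

```python
import numpy as np

def mask_frame(frame, keep):
    """Return a copy of `frame` with every pixel outside `keep` set to 0.

    `keep` is a boolean array with the frame's height and width (True = keep).
    """
    masked = frame.copy()
    masked[~keep] = 0  # a 2-D boolean index zeroes whole pixels (all channels)
    return masked

# Toy 6x8 RGB frame where every pixel is white (255).
frame = np.full((6, 8, 3), 255, dtype=np.uint8)

# Hypothetical region of interest: the bottom half of the frame.
keep = np.zeros(frame.shape[:2], dtype=bool)
keep[3:, :] = True

out = mask_frame(frame, keep)
print(out[:, 0, 0])  # first three rows are now 0, the last three still 255
```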

After masking, we need to detect the lane lines. The technique used to detect mathematical shapes like these is called the Hough transform. It can detect lines and circles, and generalized variants extend it to other shapes such as rectangles and triangles.
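The voting idea behind the Hough line transform can be sketched in plain NumPy. This is the standard (rho, theta) accumulator, not OpenCV's probabilistic `cv2.HoughLinesP` used in the project code, and `hough_accumulator` is a hypothetical helper: every edge pixel (x, y) votes for all lines rho = x·cos(theta) + y·sin(theta) passing through it, and peaks in the accumulator mark lines.

```python
import numpy as np

def hough_accumulator(edges, n_theta=180):
    """Standard Hough line voting on a binary edge map.

    Returns (acc, rhos, thetas) where acc[i, j] counts edge pixels lying on
    the line rho = x*cos(theta) + y*sin(theta) with rho = rhos[i], theta = thetas[j].
    """
    h, w = edges.shape
    diag = int(np.ceil(np.hypot(h, w)))      # largest possible |rho|
    thetas = np.deg2rad(np.arange(n_theta))  # 1-degree bins over [0, 180)
    rhos = np.arange(-diag, diag + 1)        # 1-pixel rho bins
    acc = np.zeros((len(rhos), len(thetas)), dtype=np.int64)
    cols = np.arange(len(thetas))
    ys, xs = np.nonzero(edges)
    for x, y in zip(xs, ys):
        # One vote per theta bin; shift rho by diag so it is a valid row index.
        r = np.round(x * np.cos(thetas) + y * np.sin(thetas)).astype(int) + diag
        acc[r, cols] += 1
    return acc, rhos, thetas

# Toy edge map: a vertical line of 20 pixels at x = 5.
edges = np.zeros((20, 20), dtype=np.uint8)
edges[:, 5] = 1

acc, rhos, thetas = hough_accumulator(edges)
row = np.where(rhos == 5)[0][0]
print(acc[row, 0], acc.max())  # all 20 pixels vote for (rho = 5, theta = 0)
```

In `cv2.HoughLinesP`, the `rho`, `theta`, and `threshold` arguments play the role of the bin sizes and the minimum vote count used here, and the function returns line segments instead of raw accumulator peaks.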

Code Download

Please download the source code: Lane Line Detection Project Code

Follow the steps below for lane-line detection in Python:

1. Preparation

```python
import cv2
import numpy as np
from moviepy.editor import VideoFileClip  # moviepy 1.x import path

# apply frame masking and find region of interest
def interested_region(img, vertices):
    if len(img.shape) > 2:
        mask_color_ignore = (255,) * img.shape[2]
    else:
        mask_color_ignore = 255
    # Draw the polygon in white on a black mask, then keep only those pixels.
    mask = np.zeros_like(img)
    cv2.fillPoly(mask, vertices, mask_color_ignore)
    return cv2.bitwise_and(img, mask)

# convert pixels to a line
def hough_lines(img, rho, theta, threshold, min_line_len, max_line_gap):
    lines = cv2.HoughLinesP(img, rho, theta, threshold, np.array([]),
                            minLineLength=min_line_len, maxLineGap=max_line_gap)
    line_img = np.zeros((img.shape[0], img.shape[1], 3), dtype=np.uint8)
    if lines is not None:  # HoughLinesP returns None when no segment is found
        lines_drawn(line_img, lines)
    return line_img

# create two lines per frame after Hough transformation
def lines_drawn(img, lines, color=[255, 0, 0], thickness=6):
    global cache
    global first_frame
    slope_l, slope_r = [], []
    lane_l, lane_r = [], []
    alpha = 0.2                  # smoothing factor between consecutive frames
    y_max = img.shape[0]         # bottom of the frame
    y_global_min = img.shape[0]  # highest point (smallest y) of any segment
    for line in lines:
        for x1, y1, x2, y2 in line:
            if x2 == x1:
                continue  # vertical segment: slope undefined
            slope = (y2 - y1) / (x2 - x1)
            if slope > 0.4:       # image y grows downward: right lane
                slope_r.append(slope)
                lane_r.append(line)
            elif slope < -0.4:    # left lane
                slope_l.append(slope)
                lane_l.append(line)
            y_global_min = min(y1, y2, y_global_min)
    if len(lane_l) == 0 or len(lane_r) == 0:
        print('no lane detected')
        return 1
    slope_mean_l = np.mean(slope_l, axis=0)
    slope_mean_r = np.mean(slope_r, axis=0)
    mean_l = np.mean(np.array(lane_l), axis=0)
    mean_r = np.mean(np.array(lane_r), axis=0)
    if slope_mean_r == 0 or slope_mean_l == 0:
        print('dividing by zero')
        return 1
    # Each lane is y = slope * x + b, anchored at its mean endpoint (x0, y0).
    b_l = mean_l[0][1] - slope_mean_l * mean_l[0][0]
    b_r = mean_r[0][1] - slope_mean_r * mean_r[0][0]
    # Solve for x at the top (y_global_min) and bottom (y_max) of each lane.
    x1_l = int((y_global_min - b_l) / slope_mean_l)
    x2_l = int((y_max - b_l) / slope_mean_l)
    x1_r = int((y_global_min - b_r) / slope_mean_r)
    x2_r = int((y_max - b_r) / slope_mean_r)
    if x1_l > x1_r:
        # The lanes cross below the top: join them at the midpoint instead.
        x1_l = int((x1_l + x1_r) / 2)
        x1_r = x1_l
        y1_l = int(slope_mean_l * x1_l + b_l)
        y1_r = int(slope_mean_r * x1_r + b_r)
        y2_l = int(slope_mean_l * x2_l + b_l)
        y2_r = int(slope_mean_r * x2_r + b_r)
    else:
        y1_l = y1_r = y_global_min
        y2_l = y2_r = y_max
    present_frame = np.array([x1_l, y1_l, x2_l, y2_l, x1_r, y1_r, x2_r, y2_r],
                             dtype="float32")
    if first_frame == 1:
        next_frame = present_frame
        first_frame = 0
    else:
        # Blend with the previous frame's lanes to reduce jitter.
        prev_frame = cache
        next_frame = (1 - alpha) * prev_frame + alpha * present_frame
    cv2.line(img, (int(next_frame[0]), int(next_frame[1])),
             (int(next_frame[2]), int(next_frame[3])), color, thickness)
    cv2.line(img, (int(next_frame[4]), int(next_frame[5])),
             (int(next_frame[6]), int(next_frame[7])), color, thickness)
    cache = next_frame

# process each video frame for lane detection
def weighted_img(img, initial_img, alpha=0.8, beta=1., gamma=0.):
    # result = initial_img * alpha + img * beta + gamma
    return cv2.addWeighted(initial_img, alpha, img, beta, gamma)

def process_image(image):
    # moviepy delivers RGB frames, so convert from RGB (not BGR).
    gray_image = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)
    img_hsv = cv2.cvtColor(image, cv2.COLOR_RGB2HSV)
    # Keep only yellow (HSV range) and white (bright gray) pixels.
    lower_yellow = np.array([20, 100, 100], dtype="uint8")
    upper_yellow = np.array([30, 255, 255], dtype="uint8")
    mask_yellow = cv2.inRange(img_hsv, lower_yellow, upper_yellow)
    mask_white = cv2.inRange(gray_image, 200, 255)
    mask_yw = cv2.bitwise_or(mask_white, mask_yellow)
    mask_yw_image = cv2.bitwise_and(gray_image, mask_yw)
    gauss_gray = cv2.GaussianBlur(mask_yw_image, (5, 5), 0)
    canny_edges = cv2.Canny(gauss_gray, 50, 150)
    # Trapezoidal region of interest in front of the car.
    imshape = image.shape
    lower_left = [imshape[1] / 9, imshape[0]]
    lower_right = [imshape[1] - imshape[1] / 9, imshape[0]]
    top_left = [imshape[1] / 2 - imshape[1] / 8, imshape[0] / 2 + imshape[0] / 10]
    top_right = [imshape[1] / 2 + imshape[1] / 8, imshape[0] / 2 + imshape[0] / 10]
    vertices = [np.array([lower_left, top_left, top_right, lower_right], dtype=np.int32)]
    roi_image = interested_region(canny_edges, vertices)
    theta = np.pi / 180
    line_image = hough_lines(roi_image, 4, theta, 30, 100, 180)
    return weighted_img(line_image, image, alpha=0.8, beta=1., gamma=0.)

# clip the input video to frames and get the resultant output video file
first_frame = 1
white_output = '__path_to_output_file__'
clip1 = VideoFileClip("__path_to_input_file__")
white_clip = clip1.fl_image(process_image)  # apply process_image to every frame
white_clip.write_videofile(white_output, audio=False)
```
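The `lines_drawn` function smooths lane positions across frames with an exponential moving average, next = (1 - α)·prev + α·present with α = 0.2. A standalone sketch of that update, using made-up lane endpoints (the `smooth` helper is hypothetical):

```python
import numpy as np

def smooth(prev, present, alpha=0.2):
    """Exponential moving average; with alpha = 0.2 it keeps 80% of the old estimate."""
    return (1 - alpha) * prev + alpha * present

# Hypothetical lane endpoints [x1, y1, x2, y2] from three consecutive frames;
# the second frame contains a noisy detection shifted 40 px to the right.
frames = [np.array([100.0, 400.0, 300.0, 720.0]),
          np.array([140.0, 400.0, 340.0, 720.0]),
          np.array([100.0, 400.0, 300.0, 720.0])]

state = frames[0]  # the first frame is used as-is
for present in frames[1:]:
    state = smooth(state, present)
    print(state)
```

With α = 0.2 the 40-pixel outlier moves the drawn line by only 8 pixels; a larger α reacts faster to genuine lane changes but lets more jitter through.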

2. Lane-line detection project GUI (code)

```python
import tkinter as tk

import cv2
from PIL import Image, ImageTk

# Left panel: original test video; right panel: lane-detected output video.
cap1 = cv2.VideoCapture("path_to_input_test_video")
cap2 = cv2.VideoCapture("path_to_resultant_lane_detected_video")

def show_frame(cap, label, name):
    """Show the next frame of `cap` in `label`, then reschedule itself."""
    if not cap.isOpened():
        print(f"can't open {name}")
        return
    ok, frame = cap.read()
    if not ok:
        return  # end of video or read error: stop updating this panel
    frame = cv2.resize(frame, (400, 500))         # (width, height)
    pic = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)  # OpenCV frames are BGR
    imgtk = ImageTk.PhotoImage(image=Image.fromarray(pic))
    label.imgtk = imgtk  # keep a reference so the image is not garbage-collected
    label.configure(image=imgtk)
    label.after(10, show_frame, cap, label, name)

if __name__ == '__main__':
    root = tk.Tk()
    lmain = tk.Label(master=root)
    lmain2 = tk.Label(master=root)
    lmain.pack(side=tk.LEFT)
    lmain2.pack(side=tk.RIGHT)
    root.title("Lane-line detection")
    root.geometry("900x700+100+10")
    tk.Button(root, text='Quit', fg="red", command=root.destroy).pack(side=tk.BOTTOM)
    show_frame(cap1, lmain, "camera1")
    show_frame(cap2, lmain2, "camera2")
    root.mainloop()
    cap1.release()
    cap2.release()
```

Defect detection with an SSD network

(ultrasonic inspection; YouTube)

This project aims to develop a system that uses convolutional neural networks (CNNs) to detect defects in composite laminate materials automatically, in order to increase the accuracy and efficiency of ultrasonic inspection. For inspectors, ultrasonic testing is a labor-intensive and time-consuming manual task; this approach improves their efficiency and accuracy and reduces their workload when interpreting ultrasonic scan images to identify defects. Discontinuities and defects in materials usually do not have specific shapes, positions, or orientations. A Jetson TX2 Developer Kit runs an image-analysis function in real time, using computer vision with a Single Shot MultiBox Detector (SSD) network trained on images of delamination defects. The SSD network can also evaluate components and specimens inspected with other methods, such as thermography.

Applying Evolutionary Artificial Neural Networks

A 2D Unity simulation in which cars learn to navigate through different courses. The cars are steered by a feedforward Neural Network whose weights are trained using a modified genetic algorithm. Short demo video of an early version: https://youtu.be/rEDzUT3ymw4

The Simulation

Cars have to navigate through a course without touching the walls or any other obstacles of the course. A car has five front-facing sensors, each of which measures the distance to obstacles in a given direction. The readings of these sensors serve as the input of the car's neural network. Each sensor points in a different direction, together covering a front-facing range of approximately 90 degrees. The maximum range of a sensor is 10 Unity units. The output of the Neural Network then determines the car's current engine and turning force.

If you would like to tinker with the parameters of the simulation, you can do so in the Unity Editor. If you would simply like to run the simulation with default parameters, you can start the built file Builds/Applying EANNs.exe.

The Neural Network

The Neural Network used is a standard, fully connected, feedforward Neural Network. It comprises 4 layers: an input layer with 5 neurons, two hidden layers with 4 and 3 neurons respectively, and an output layer with 2 neurons. The code for the Neural Network can be found at UnityProject/Assets/Scripts/AI/NeuralNetworks/.
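A minimal NumPy sketch of a forward pass through the 5-4-3-2 topology described above. The project itself is written in C# for Unity; the uniform random initialisation, the bias terms, and the tanh activation here are assumptions for illustration, so check the scripts under UnityProject/Assets/Scripts/AI/NeuralNetworks/ for the actual choices.

```python
import numpy as np

LAYER_SIZES = [5, 4, 3, 2]  # input, hidden 1, hidden 2, output

def init_network(sizes, rng):
    """One (weights, biases) pair per pair of consecutive layers, drawn from U(-1, 1)."""
    return [(rng.uniform(-1, 1, size=(n_in, n_out)), rng.uniform(-1, 1, size=n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def forward(network, inputs):
    """Fully connected feedforward pass; tanh keeps every activation in (-1, 1)."""
    a = np.asarray(inputs, dtype=float)
    for weights, biases in network:
        a = np.tanh(a @ weights + biases)
    return a

rng = np.random.default_rng(0)
net = init_network(LAYER_SIZES, rng)
sensors = [0.8, 0.6, 1.0, 0.4, 0.2]  # five made-up sensor readings
engine, turning = forward(net, sensors)
print(f"engine={engine:.3f}, turning={turning:.3f}")
```

The network has 5·4 + 4·3 + 3·2 = 38 weights (plus biases in this sketch), which is the genome the genetic algorithm evolves.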

Training the Neural Network

The weights of the Neural Network are trained using an Evolutionary Algorithm known as the Genetic Algorithm.

First, N randomly initialised cars are spawned. The best cars are then selected to be recombined with each other, creating new "offspring" cars. These offspring cars form a new population of N cars and are also slightly mutated in order to inject more diversity into the population. The newly created population then tries to navigate the course again, and the process of evaluation, selection, recombination and mutation starts over. One complete cycle from the evaluation of one population to the evaluation of the next is called a generation.
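The generation cycle above (evaluation, selection, recombination, mutation) can be sketched in Python on flat weight vectors. This is not the project's C# GeneticAlgorithm.cs: truncation selection of the top two, uniform crossover, sparse Gaussian mutation, and the toy fitness function (standing in for course completion) are all assumptions.

```python
import numpy as np

def evolve(fitness, genome_len, pop_size=20, generations=100,
           n_parents=2, mutation_prob=0.1, mutation_scale=0.5, seed=0):
    """Minimal genetic algorithm over real-valued genomes (e.g. network weights).

    Returns the best genome of the final generation and its fitness.
    """
    rng = np.random.default_rng(seed)
    # Generation 0: N randomly initialised individuals.
    population = rng.uniform(-1, 1, size=(pop_size, genome_len))
    for _ in range(generations):
        # Evaluation: score every individual (higher is better).
        scores = np.array([fitness(g) for g in population])
        # Selection: the best n_parents individuals become parents.
        parents = population[np.argsort(scores)[::-1][:n_parents]]
        offspring = []
        for _ in range(pop_size):
            a, b = parents[rng.choice(n_parents, size=2, replace=False)]
            # Recombination: uniform crossover takes each gene from either parent.
            child = np.where(rng.random(genome_len) < 0.5, a, b)
            # Mutation: add Gaussian noise to a few genes to keep diversity.
            mutate = rng.random(genome_len) < mutation_prob
            child = child + mutate * rng.normal(0.0, mutation_scale, genome_len)
            offspring.append(child)
        population = np.array(offspring)  # the next generation, again N individuals
    scores = np.array([fitness(g) for g in population])
    best = int(np.argmax(scores))
    return population[best], scores[best]

# Toy fitness standing in for course completion: reward weights close to 0.5.
target = np.full(38, 0.5)  # 38 weights = 5*4 + 4*3 + 3*2 in a 5-4-3-2 network
best, best_score = evolve(lambda g: -np.sum((g - target) ** 2), genome_len=38)
print(round(float(best_score), 3))
```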

The generic version of the Genetic Algorithm can be found at UnityProject/Assets/Scripts/AI/Evolution/GeneticAlgorithm.cs. This class can be modified easily by assigning your own methods to its delegate operator methods. Example code for adapting the Genetic Algorithm to your own needs can be found in the EvolutionManager (UnityProject/Assets/Scripts/AI/Evolution/EvolutionManager.cs), which can already switch between two differently modified Genetic Algorithms.

User Interface

The user interface always displays the data of the current best car. In the top left corner the Neural Network’s output (engine and turning) is displayed. Right below the output, the evaluation value is displayed (the evaluation value is equal to the percentage of course completion). In the lower left corner a generation counter is displayed. In the upper right corner the Neural Network of the current best car is displayed. The weights are symbolised by the color and width of the connections between neurons: The wider a connection, the bigger the absolute value of the weight; Green means that the weight is positive, red means that the weight is negative.
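The colour and width encoding described above can be sketched as a small mapping function. The pixel-width range, the linear scaling against the largest absolute weight, treating a zero weight as positive, and the name `connection_style` are all assumptions; the project's actual drawing code lives in UnityProject/Assets/Scripts/GUI/.

```python
def connection_style(weight, max_abs_weight, min_width=1.0, max_width=6.0):
    """Map a weight to (colour, line width) for drawing a connection.

    Green for positive weights (zero is treated as positive here), red for
    negative ones; the width grows linearly with |weight| relative to the
    largest absolute weight in the network.
    """
    colour = "green" if weight >= 0 else "red"
    scale = abs(weight) / max_abs_weight if max_abs_weight > 0 else 0.0
    width = min_width + scale * (max_width - min_width)
    return colour, width

weights = [0.9, -0.3, 0.0, -1.2]
max_abs = max(abs(w) for w in weights)
for w in weights:
    print(w, connection_style(w, max_abs))
```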

The entire UI-code is located at UnityProject/Assets/Scripts/GUI/.

Courses

There are multiple courses of varying difficulty, each in its own Unity scene; they can be found in the folder UnityProject/Assets/Scenes/Tracks/.

In order to start the simulation on a specific course, open the Main scene and enter the desired track-name (= scene name) in the Inspector of the GameStateManager object.