- facetracking

- arkit facetracking

- arcore facetracking

- arcore face tracking

- ARcore detection

- 3D model

- Tech list

facetracking

arkit facetracking

This article lists some of the requirements for face tracking and the features available in ARKit 3:

https://9to5mac.com/2019/06/04/arkit-3-device-support/

“People Occlusion and the use of motion capture, simultaneous front and back camera, and multiple face tracking are supported on devices with A12/A12X Bionic chips, ANE, and TrueDepth Camera.”

ARKit 3 goes further than ever before, naturally showing AR content in front of or behind people using People Occlusion, tracking up to three faces at a time, supporting collaborative sessions, and more. And now, you can take advantage of ARKit’s new awareness of people to integrate human movement into your app.

arcore facetracking

arcore face tracking

https://user-images.githubusercontent.com/42961200/177655205-f12e949d-781e-49d7-b0fa-4a62e5236ded.mp4

The Augmented Faces API allows you to render assets on top of human faces without using specialized hardware. It provides feature points that enable your app to automatically identify different regions of a detected face. Your app can then use those regions to overlay assets in a way that properly matches the contours of an individual face.

methods

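- Work out the spatial relationship between the cameras and the subject

- Recognize a specific person seen by the camera

- Identify that person's physical features

- Map them onto a rigged character in the virtual space

- Transmit the real camera's pan/tilt values

- Apply the pan/tilt values to the virtual camera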
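The last two steps, passing the real camera's pan/tilt to the virtual camera, can be sketched as a conversion from two angles to a forward direction vector. This is only a minimal sketch, not code from this project: the function name and the axis convention (x forward, y left, z up, pan about z, positive tilt looking up) are assumptions, and a game engine may use a different convention.

```python
import math


def pan_tilt_to_forward(pan_deg, tilt_deg):
    """Return a unit forward vector for the given pan and tilt angles.

    Assumed (hypothetical) convention: x forward, y left, z up;
    pan rotates about z, positive tilt looks up.
    """
    pan = math.radians(pan_deg)
    tilt = math.radians(tilt_deg)
    x = math.cos(tilt) * math.cos(pan)
    y = math.cos(tilt) * math.sin(pan)
    z = math.sin(tilt)
    return (x, y, z)
```

The virtual camera would then be oriented along this vector each time a new pan/tilt reading arrives from the real camera.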

Methods

Here’s a simplified breakdown of the steps these artists follow:

- Tracking the position, shape and movement of the face relative to the camera in 3D

- Animation of the 3D models to snap on the tracked face (e.g. a dog nose)

- Lighting and rendering of the 3D models into 2D images

- Compositing of the rendered CGI images with the live action footage

Step 4 can be done by placing a 3D foreground over a live background. Steps 2 and 3 are handled the same way as in a video game.
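As an illustration of the compositing step, the standard "over" blend of a rendered foreground pixel on top of a live-footage pixel can be sketched as follows. This is an assumption-laden sketch rather than any SDK's implementation: frames are plain nested lists, channels are floats in [0, 1], and the foreground colour is assumed not to be premultiplied by alpha.

```python
def composite_over(fg_rgba, bg_rgb):
    """Blend one rendered RGBA pixel over one live-footage RGB pixel.

    Channels are floats in [0, 1]; the foreground colour is assumed
    to be non-premultiplied.
    """
    r, g, b, a = fg_rgba
    return tuple(a * f + (1.0 - a) * k for f, k in zip((r, g, b), bg_rgb))


def composite_frame(fg, bg):
    """Composite two equally sized frames given as nested lists of pixels."""
    return [
        [composite_over(f, k) for f, k in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(fg, bg)
    ]
```

Where the rendered model is fully transparent (alpha 0) the live footage shows through unchanged, and where it is opaque (alpha 1) the model replaces the footage.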

In short, for face tracking you either use Google's or Apple's SDK, or build it yourself with ML vision.

machine learning

Object detection/tracking: the basic structure of machine learning

The following models can be used: MobileNet, SqueezeNet, and ShuffleNet.

Of these, ShuffleNet,

Feature learning: the facial feature points the network learns are called landmarks

dataset

Building a training dataset

For augmenting videos with 3D effects, look for a dataset with 3D landmark coordinates such as 300W-LP.

- apply the following transformations, each by a random amount, to every image and its landmarks to create new samples: rotation of up to ±40° around the centre, up to 10% translation and scale, and horizontal flip

- apply a different random transformation in memory on each image and for each learning pass (epoch) for additional augmentation.

- build a Linux VM with a GeForce GTX 1080 Ti and prepare an OS and storage image in which I set up PyTorch and upload my code and the datasets, all over SSH. Once the system is set up, it can be started and shut down on demand, and creating a snapshot makes it possible to resume the work
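The augmentation described in the list above can be sketched with the standard library alone. This is a minimal sketch under stated assumptions, not the original training code: the function names are hypothetical, landmarks are (x, y) pixel pairs, and only the landmark side is shown. The same 2x3 matrix could be handed to an image-warping routine such as OpenCV's `cv2.warpAffine` to transform the image itself.

```python
import math
import random


def random_affine(width, height, rng=random):
    """Sample a 2x3 affine matrix: rotation up to +/-40 degrees about the
    image centre, up to 10% translation and scale, optional horizontal flip."""
    angle = math.radians(rng.uniform(-40.0, 40.0))
    scale = rng.uniform(0.9, 1.1)
    tx = rng.uniform(-0.1, 0.1) * width
    ty = rng.uniform(-0.1, 0.1) * height
    flip = -1.0 if rng.random() < 0.5 else 1.0
    cx, cy = width / 2.0, height / 2.0
    cos_a, sin_a = math.cos(angle) * scale, math.sin(angle) * scale
    # Flip x about the centre, rotate/scale about the centre, then translate.
    a, b = cos_a * flip, -sin_a
    c, d = sin_a * flip, cos_a
    return [
        [a, b, cx - a * cx - b * cy + tx],
        [c, d, cy - c * cx - d * cy + ty],
    ]


def transform_landmarks(matrix, landmarks):
    """Apply a 2x3 affine matrix to a list of (x, y) landmarks.

    Note: after a horizontal flip, left/right landmark indices (e.g. the
    two eyes) would also need swapping; that step is not handled here.
    """
    (a, b, e), (c, d, f) = matrix
    return [(a * x + b * y + e, c * x + d * y + f) for x, y in landmarks]
```

Calling `random_affine` every time a sample is loaded, rather than once up front, gives a different transformation on each epoch.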

camera operation

Camera drive unit: Raspberry Pi, Arduino, Python, ROS 1

Signal processing

Signal processing unit: Ubuntu 20.04, Python 3.8, OpenCV, ROS 1

vision processing

Vision processing unit: Windows 10, Unreal Engine, C++, ROS 1

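Among the many items related to the metaverse, which is described as a next-generation growth engine, is "cinematics" built with game engines such as Unity or Unreal Engine. To implement the movement (animation) of the characters that appear in films or animations produced this way, the technology has so far been limited to suit-type motion capture equipment or to the trackers of VR devices such as Oculus.

VR devices are still quite heavy, and the mechanical limits of the equipment needed to operate them mean that either fine detail cannot be expressed or skilled 3D artists must do a considerable amount of post-production work. As a result, the process remains low in efficiency and high in cost.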

ARcore detection

machine learning

https://www.hackster.io/gr1m/raspberry-pi-facial-recognition-16e34e

https://aws.amazon.com/rekognition/

Description

Pi-detector is used with Pi-Timolo to search motion-generated images for face matches by leveraging AWS Rekognition. In its current state, matches are written to event.log. With some additional creativity and work, you could send out a notification or allow/deny access to a room with minimal changes. The install script will place the appropriate files in /etc/rc.local to start on boot.

Build Requirements

- Raspberry Pi (tested with RPi 3)

- Picamera

- AWS Rekognition access (free tier options available)

As an alternative, this set of scripts can be modified to watch any directory that contains images. For example, if you collect still images from another camera and save them to disk, you can alter the image path to run facial recognition against any new photo that is created.
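Watching an arbitrary directory for new photos can be sketched with a simple polling helper. This is a hypothetical illustration, not part of the pi-detector scripts: the function name, the extension list, and the idea of keeping a `seen` set in memory are all assumptions.

```python
import os

# Assumed set of extensions to treat as images (hypothetical).
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def find_new_images(directory, seen):
    """Return image paths in `directory` that are not yet in `seen`.

    Newly found paths are added to `seen`, so the next call only
    reports files created since this one.
    """
    new_paths = []
    for name in sorted(os.listdir(directory)):
        path = os.path.join(directory, name)
        ext = os.path.splitext(name)[1].lower()
        if ext in IMAGE_EXTENSIONS and os.path.isfile(path) and path not in seen:
            seen.add(path)
            new_paths.append(path)
    return new_paths
```

A caller would invoke `find_new_images` in a loop with a short sleep between calls and pass each returned path to the face-matching step.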

AWS Rekognition

Before installing, it is best to get up and running on AWS. For my project, I am using the AWS Free Tier service, which allows 5000 API calls per month and is good enough for this project. Log in to your console and create a new IAM user that has administrative rights to Rekognition.

Install

Set up a Raspberry Pi with Raspbian Jessie

SSH into your Raspberry Pi (or connect it to a monitor and log in using pi as the username and raspberry as the password). Don’t forget to change the IP address below to your Pi’s IP. If you need help finding it on the network, use nmap (nmap -sn 192.168.1.0/24)

Clone this repo and install:

git clone https://github.com/af001/pi-detector.git

cd pi-detector/scripts

sudo chmod +x install.sh

sudo ./install.sh

During the install, you will be prompted for the AWS credentials that you set up earlier. When asked, enter your AWS Access Key ID and AWS Secret Access Key, and set the region to us-east-1 (adjust to match the region you chose when you set up AWS Rekognition earlier). An example output would look similar to the image below:

Getting started

First, you need to create a new collection on AWS Rekognition. Creating a ‘home’ collection would look like:

cd pi-detector/scripts

python add_collection.py -n 'home'

Next, add your images to the pi-detector/faces folder. The more images of a person you add, the better the detection results will be. I would recommend several different poses in different lighting.

cd pi-detector/faces

python ../scripts/add_image.py -i 'image.jpg' -c 'home' -l 'Tom'
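For orientation, indexing a labelled face into a Rekognition collection with boto3 might look like the sketch below. It is not the source of add_image.py, whose internals are not shown here; the function name is hypothetical, and `client` is assumed to be a Rekognition client such as the one returned by `boto3.client("rekognition", region_name="us-east-1")`.

```python
def add_face(client, collection_id, image_path, label):
    """Index one face image into a collection and return the new FaceIds.

    `client` is assumed to be a boto3 Rekognition client; `label` is
    stored as the face's ExternalImageId.
    """
    with open(image_path, "rb") as handle:
        image_bytes = handle.read()
    response = client.index_faces(
        CollectionId=collection_id,
        Image={"Bytes": image_bytes},
        ExternalImageId=label,
    )
    return [record["Face"]["FaceId"] for record in response["FaceRecords"]]
```

The returned face IDs are the kind of value you would need later when deleting a face from the collection.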

I found the best results by taking a photo in the same area where the camera will be placed, and by using the picam. If you want to do this, I created a small Python script that takes a photo after a 10-second delay and puts it into the pi-detector/faces folder. To use it:

cd pi-detector/scripts

python take_selfie.py

Once complete, you can go back and rename the file and repeat the steps above to add your images to AWS Rekognition. Once you create a new collection, or add a new image, two reference files will be created as a future reference. These are useful if you plan on deleting images or collections in the future.

At this point, the setup is ready to go. You can set up Wi-Fi on your RPi and place the camera where you want in your home. Once you plug in the RPi, it should start working with no additional work needed from the user. To check your logs, just SSH into the RPi and check the event.log file for a reference to your detections.

To delete a face from your collection, use the following:

cd pi-detector/scripts

python del_faces.py -i '000-000-000-000' -c 'home'

If you need to find the image id or a collection name, reference your faces.txt and collections.txt files.

To remove a collection:

cd pi-detector/scripts

python del_collections.py -c 'home'

Note that the above will also delete all the faces you have stored in AWS.

The last script is facematch.py. If you have images updated and just want to test static photos against the faces you have stored on AWS, do the following:

cd pi-detector/scripts

python facematch.py -i 'tom.jpg' -c 'home'

The results will be printed to the screen, including the percentage of similarity and confidence.
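As a rough picture of where the similarity and confidence figures come from, a face search against a collection with boto3 could be sketched as below. This is an assumption about the general approach, not the actual facematch.py: the function name, the default threshold, and the `MaxFaces` value are hypothetical, and `client` is assumed to be a boto3 Rekognition client.

```python
def match_face(client, collection_id, image_bytes, threshold=80.0):
    """Search a collection for faces matching the largest face in the image.

    Returns a list of (label, similarity, confidence) tuples. `client` is
    assumed to be a boto3 Rekognition client; the threshold is illustrative.
    """
    response = client.search_faces_by_image(
        CollectionId=collection_id,
        Image={"Bytes": image_bytes},
        FaceMatchThreshold=threshold,
        MaxFaces=5,
    )
    return [
        (
            match["Face"].get("ExternalImageId"),
            match["Similarity"],
            match["Face"]["Confidence"],
        )
        for match in response["FaceMatches"]
    ]
```

An empty list means no stored face met the similarity threshold.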

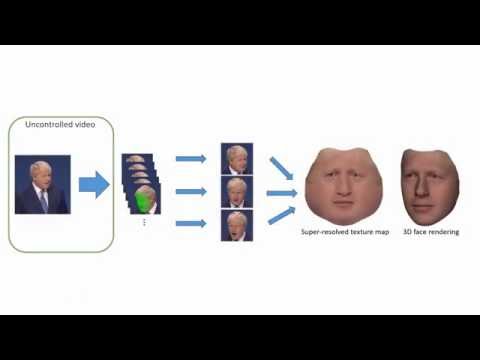

3D model

3D model

To be able to build an app like Snapchat, we actually need more than a couple of 3D points. A common approach is to use a 3D Morphable Face Model (3DMM) and fit it to the annotated 3D points. The 300W-LP dataset actually comes with such data. The network could learn all the vertices of the 3D model instead of a small number of landmarks, so that a 3D face is predicted directly by the model and is ready to use for some AR fun!
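The core idea of a linear morphable model, a mean face plus a weighted sum of shape components, can be sketched in a few lines. This is a toy illustration with made-up data structures, not the 300W-LP format or any particular 3DMM library: the function name and the list-of-tuples layout are assumptions.

```python
def morphable_face(mean_shape, basis, coefficients):
    """Compute vertices = mean_shape + sum_k coefficients[k] * basis[k].

    mean_shape: list of (x, y, z) vertices.
    basis: list of K components, each a list of (dx, dy, dz) per vertex.
    coefficients: list of K weights, e.g. as predicted by a network.
    """
    vertices = [list(v) for v in mean_shape]
    for weight, component in zip(coefficients, basis):
        for vertex, delta in zip(vertices, component):
            for axis in range(3):
                vertex[axis] += weight * delta[axis]
    return [tuple(v) for v in vertices]
```

With this representation a network only has to predict the handful of coefficients, and the full set of vertices follows from the model.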

texture

The texture consists of eye shadow, freckles, and other coloring.


application of Kinect SDK

to practice this feature using Blender

to outfit the player with custom costumes for body tracking

Tech list

- to do list for future mini projects

- my museum

- face tracking

- aquarium ar 📦 arkit

- geo location based app

- pokemon go clone 👎🏽 vuforia mapbox geo-location backend

- dynamic image/video 3d model upload system 🦘 vuforia mapbox geo-location

- restaurant menu app 🧷 arkit 3d app-design backend 👶🏽 arkit 3d

- live animal 4d 🥇 vuforia, mapbox, geolocation backend-api

- pro boxing with politicians 👍🏽 unity arkit

- ar color stack 👍🏽 arkit

- 3d dynamics books 💯 unity vuforia android iphone

- furniture ar 🔢 unity easyar plane-detection backend-api

- volve show with ar 👍🏽 unity vuforia 3d

- zoomerang for swimming pool with arkit : unity arkit

- living texture - unity vuforia 3d

